updown.utils.constraints

A collection of helper methods and classes for preparing constraints and building finite state machines for Constrained Beam Search (CBS).

-

updown.utils.constraints.add_constraint_words_to_vocabulary(vocabulary: allennlp.data.vocabulary.Vocabulary, wordforms_tsvpath: str, namespace: str = 'tokens') → allennlp.data.vocabulary.Vocabulary

Expand the Vocabulary with CBS constraint words. We do not need to worry about duplicate words between the constraints and the caption vocabulary; AllenNLP avoids duplicates automatically.

- Parameters

- vocabulary: allennlp.data.vocabulary.Vocabulary

The vocabulary to be expanded with provided words.

- wordforms_tsvpath: str

Path to a TSV file containing two fields: first is the name of Open Images object class and second field is a comma separated list of words (possibly singular and plural forms of the word etc.) which could be CBS constraints.

- namespace: str, optional (default = "tokens")

The namespace of the Vocabulary to which these words are added.

- Returns

- allennlp.data.vocabulary.Vocabulary

The expanded Vocabulary with all the words added.
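The word-forms file format described above can be illustrated with a minimal sketch. It does not use AllenNLP: a plain token-to-index dict stands in for the Vocabulary, and the helper names `read_wordforms` and `add_words` are hypothetical. Splitting multi-word forms into individual tokens is an assumption made for illustration.

```python
import csv
import io
from typing import Dict, List


def read_wordforms(tsv_text: str) -> Dict[str, List[str]]:
    """Parse rows of 'class_name<TAB>form1,form2,...' into a dict."""
    wordforms: Dict[str, List[str]] = {}
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    for row in reader:
        if len(row) < 2:
            continue  # skip blank or malformed rows
        class_name, forms = row[0], row[1]
        wordforms[class_name] = [w.strip() for w in forms.split(",") if w.strip()]
    return wordforms


def add_words(token_to_index: Dict[str, int],
              wordforms: Dict[str, List[str]]) -> Dict[str, int]:
    """Add every word to a token -> index mapping, skipping duplicates."""
    for forms in wordforms.values():
        for form in forms:
            # Assumption: a multi-word form contributes each of its words.
            for token in form.split():
                token_to_index.setdefault(token, len(token_to_index))
    return token_to_index


# Hypothetical two-row TSV; "dog" already exists in the toy vocabulary.
sample_tsv = "Dog\tdog,dogs\nFire hydrant\tfire hydrant,fire hydrants\n"
vocab = add_words({"a": 0, "dog": 1}, read_wordforms(sample_tsv))
```

The existing entry for "dog" keeps its index, which mirrors the duplicate handling mentioned above.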

-

class updown.utils.constraints.ConstraintFilter(hierarchy_jsonpath: str, nms_threshold: float = 0.85, max_given_constraints: int = 3)

Bases: object

A helper class to perform constraint filtering, providing a sensible set of constraint words for decoding.

The original work proposing Constrained Beam Search selects constraints randomly.

We first remove categories that belong to a fixed “blacklist”: categories which are either too rare, not commonly uttered by humans, or well covered in COCO. We then resolve overlapping detections (IoU >= 0.85) by removing the higher-order of the two objects (e.g., a “dog” would suppress a “mammal”) based on the Open Images class hierarchy (keeping both if equal). Finally, we take the top-k objects based on detection confidence as constraints.

- Parameters

- hierarchy_jsonpath: str

Path to a JSON file containing a hierarchy of Open Images object classes.

- nms_threshold: float, optional (default = 0.85)

NMS threshold for suppressing generic object class names during constraint filtering. For two boxes with IoU higher than this threshold, the more specific class suppresses the more generic one (for example, “dog” suppresses “animal”).

- max_given_constraints: int, optional (default = 3)

Maximum number of constraints which can be specified for CBS decoding. Constraints are selected based on the prediction confidence score of their corresponding bounding boxes.
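The three filtering steps (blacklist, hierarchy-aware suppression, top-k by confidence) can be sketched in plain Python. This is not the class's actual implementation: the function `filter_constraints`, the `depth` map (distance of each class from the hierarchy root), and the sample class names are assumptions made for illustration.

```python
from typing import Dict, List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[str, Box, float]       # (class name, box, confidence)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_constraints(detections: List[Detection], depth: Dict[str, int],
                       blacklist: Set[str], nms_threshold: float = 0.85,
                       max_given_constraints: int = 3) -> List[str]:
    # Step 1: drop blacklisted classes.
    kept = [d for d in detections if d[0] not in blacklist]
    # Step 2: for overlapping pairs, suppress the class closer to the root.
    suppressed = set()
    for i in range(len(kept)):
        for j in range(i + 1, len(kept)):
            if iou(kept[i][1], kept[j][1]) >= nms_threshold:
                di, dj = depth[kept[i][0]], depth[kept[j][0]]
                if di < dj:
                    suppressed.add(i)
                elif dj < di:
                    suppressed.add(j)
                # equal depth: keep both
    survivors = [d for k, d in enumerate(kept) if k not in suppressed]
    # Step 3: keep the top-k detections by confidence.
    survivors.sort(key=lambda d: d[2], reverse=True)
    return [d[0] for d in survivors[:max_given_constraints]]


# Hypothetical detections, hierarchy depths, and blacklist.
detections = [
    ("Dog", (0, 0, 10, 10), 0.90),
    ("Mammal", (0, 0, 10, 10), 0.95),
    ("Clothing", (20, 20, 30, 30), 0.99),
    ("Tree", (50, 50, 60, 60), 0.50),
]
depth = {"Mammal": 2, "Dog": 3, "Tree": 2, "Clothing": 1}
constraints = filter_constraints(detections, depth, blacklist={"Clothing"})
```

Here "Mammal" is dropped despite its higher confidence, because the overlapping "Dog" box is more specific in the toy hierarchy.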

-

class

updown.utils.constraints.FiniteStateMachineBuilder(vocabulary: allennlp.data.vocabulary.Vocabulary, wordforms_tsvpath: str, max_given_constraints: int = 3, max_words_per_constraint: int = 3)[source]¶ Bases:

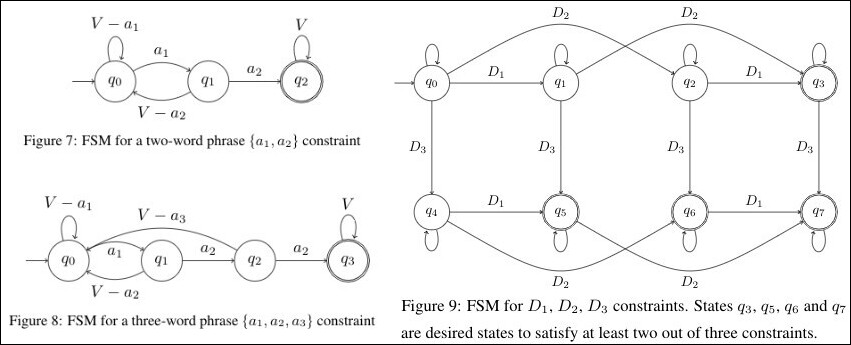

objectA helper class to build a Finite State Machine for Constrained Beam Search, as per the state transitions shown in Figures 7 through 9 from our paper appendix.

The FSM is constructed on a per-example basis and supports up to three constraints, with each constraint being an Open Images class having up to three words (for example, salt and pepper). Each word in the constraint may have several word-forms (for example, dog, dogs).

Note

Providing more than three constraints may work, but it is not tested.

Details on Finite State Machine Representation

The FSM is represented as an adjacency matrix. Specifically, it is a tensor of shape (num_total_states, num_total_states, vocab_size). Here, fsm[S1, S2, W] = 1 indicates a transition from “S1” to “S2” if word “W” is decoded. For example, consider Figure 9. The decoding is at the initial state (q0), the constraint word is D1, and any other word in the vocabulary is Dx. Then we have:

    fsm[0, 0, D1] = 0 and fsm[0, 1, D1] = 1    # arrow from q0 to q1
    fsm[0, 0, Dx] = 1 and fsm[0, 1, Dx] = 0    # self-loop on q0
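As a rough illustration of this representation, the sketch below builds the single-constraint case with nested Python lists instead of a torch.Tensor. The toy vocabulary, the `make_fsm` and `step` helpers, and the choice to start with a self-loop on every state are assumptions for illustration only.

```python
from typing import List

Fsm = List[List[List[int]]]  # indexed as fsm[from_state][to_state][word]


def make_fsm(num_states: int, vocab_size: int) -> Fsm:
    """Create an FSM where every state loops to itself on every word."""
    fsm = [[[0] * vocab_size for _ in range(num_states)] for _ in range(num_states)]
    for s in range(num_states):
        for w in range(vocab_size):
            fsm[s][s][w] = 1
    return fsm


def step(fsm: Fsm, state: int, word: int) -> int:
    """Return the state reached from `state` after decoding `word`."""
    for to_state in range(len(fsm)):
        if fsm[state][to_state][word] == 1:
            return to_state
    raise ValueError("no transition defined")


# Hypothetical toy vocabulary; "dog" plays the role of D1.
vocab = {"a": 0, "dog": 1, "runs": 2}
D1 = vocab["dog"]

fsm = make_fsm(num_states=2, vocab_size=len(vocab))
fsm[0][0][D1] = 0  # remove the self-loop on q0 for the constraint word
fsm[0][1][D1] = 1  # arrow from q0 to q1

state = 0
for token in ["a", "dog", "runs"]:
    state = step(fsm, state, vocab[token])
```

After decoding "a dog runs", the machine sits in q1, meaning the single constraint has been satisfied.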

Consider up to “k” (3) constraints and up to “w” (3) words per constraint. We define these terms (as members of the class):

    _num_main_states = 2 ** k (8)
    _total_states = num_main_states * w (24)

The first eight states are considered “main states” and are always part of the FSM. With fewer than “k” constraints, some states are unreachable, hence “useless”. These are ignored automatically.

For any multi-word constraint, we use extra “sub-states” after the first 2 ** k states. We make connections according to Figures 7 and 8 for such constraints. We dynamically trim unused sub-states to save computation during decoding. That said, the num_total_states dimension is at least 8.

A state “q” satisfies a number of constraints equal to the number of “1”s in the binary representation of that state. For example:

state “q0” (000) satisfies 0 constraints.

state “q1” (001) satisfies 1 constraint.

state “q2” (010) satisfies 1 constraint.

state “q3” (011) satisfies 2 constraints.

and so on. Only main states fully satisfy constraints.
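The state arithmetic above can be checked with a few lines of Python. The function name `num_satisfied` is hypothetical; the values of k and w are the defaults described in this section.

```python
def num_satisfied(state: int) -> int:
    """Number of constraints satisfied by a main state: count of 1-bits."""
    return bin(state).count("1")


k, w = 3, 3                        # constraints, words per constraint
num_main_states = 2 ** k           # 8
total_states = num_main_states * w  # 24

# Map each main state "q<i>" to the number of constraints it satisfies.
satisfied = {f"q{q}": num_satisfied(q) for q in range(num_main_states)}
```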

- Parameters

- vocabulary: allennlp.data.Vocabulary

AllenNLP’s vocabulary containing the token-to-index mapping for the captions vocabulary.

- wordforms_tsvpath: str

Path to a TSV file containing two fields: first is the name of Open Images object class and second field is a comma separated list of words (possibly singular and plural forms of the word etc.) which could be CBS constraints.

- max_given_constraints: int, optional (default = 3)

Maximum number of constraints which can be given during CBS decoding. Up to three are supported.

- max_words_per_constraint: int, optional (default = 3)

Maximum number of words per constraint for multi-word constraints. Note that these are for multi-word object classes (for example, fire hydrant) and not for multiple “word-forms” of a word, like singular and plural forms. Up to three are supported.

-

build(self, constraints: List[str])

Build a finite state machine given a list of constraints.

- Parameters

- constraints: List[str]

A list of up to three (possibly) multi-word constraints; in our use case, these are Open Images object class names.

- Returns

- Tuple[torch.Tensor, int]

A finite state machine as an adjacency matrix, and the index of the next available unused sub-state. This index is later used to trim the unused sub-states from the FSM.
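One way the returned index could be used for trimming is sketched below with nested lists. The helper `trim_fsm` is hypothetical; with a torch.Tensor the equivalent slice would presumably be `fsm[:substate_idx, :substate_idx, :]`.

```python
from typing import List

Fsm = List[List[List[int]]]  # indexed as fsm[from_state][to_state][word]


def trim_fsm(fsm: Fsm, substate_idx: int) -> Fsm:
    """Keep only states below `substate_idx` along both state dimensions."""
    return [row[:substate_idx] for row in fsm[:substate_idx]]


# Hypothetical sizes: 24 total states, vocabulary of 4 words,
# and one sub-state in use (index 8), so the next unused index is 9.
full = [[[0] * 4 for _ in range(24)] for _ in range(24)]
trimmed = trim_fsm(full, substate_idx=9)
shape = (len(trimmed), len(trimmed[0]), len(trimmed[0][0]))
```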

-

_add_nth_constraint(self, fsm: torch.Tensor, n: int, substate_idx: int, constraint: str)

Given an (incomplete) FSM matrix with transitions for “(n - 1)” constraints added, add all transitions for the “n-th” constraint.

- Parameters

- fsm: torch.Tensor

A tensor of shape (num_total_states, num_total_states, vocab_size) representing an FSM under construction.

- n: int

The ordinal position of the constraint to be added. Goes as 1, 2, 3… (not zero-indexed).

- substate_idx: int

An index which points to the next unused position for a sub-state. It starts at the number of main states (2 ** k) and increases according to the number of multi-word constraints added so far. The calling method, build(), keeps track of this.

- constraint: str

A (possibly) multi-word constraint; in our use case, it is an Open Images object class name.

- Returns

- Tuple[torch.Tensor, int]

FSM with added connections for the constraint, and the updated substate_idx pointing to the next unused sub-state.
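Following the binary state encoding described earlier, the main-state transitions needed for the n-th constraint can be enumerated as below. This is a sketch under that assumption, not the method's actual code, and `main_state_pairs` is a hypothetical name. Sub-states for multi-word constraints are not modelled here.

```python
from typing import List, Tuple


def main_state_pairs(n: int, num_constraints: int = 3) -> List[Tuple[int, int]]:
    """List (from_state, to_state) pairs for the n-th constraint (1-indexed).

    A transition starts at every main state whose (n - 1)-th bit is unset
    and ends at the same state with that bit set.
    """
    bit = 1 << (n - 1)
    return [(s, s | bit) for s in range(2 ** num_constraints) if not s & bit]


pairs_first = main_state_pairs(1)
pairs_second = main_state_pairs(2)
pairs_third = main_state_pairs(3)
```

Each constraint contributes four main-state transitions when k = 3, one for every combination of the other two constraints being satisfied or not.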

-

_connect(self, fsm: torch.Tensor, from_state: int, to_state: int, word: str, reset_state: int = None)

Add a connection between two states for a particular word (and all its word-forms). This means removing the self-loop from from_state for all word-forms of word and connecting them to to_state.

In case of multi-word constraints, we return to the reset_state for any utterance other than word, so that a multi-word constraint is satisfied only if all its words are decoded consecutively. For example, for “fire hydrant” as a constraint between Q0 and Q1, we reach a sub-state “Q8” on decoding “fire”. We go to main state “Q1” on decoding “hydrant” immediately after; otherwise we reset to main state “Q0”.

- Parameters

- fsm: torch.Tensor

A tensor of shape (num_total_states, num_total_states, vocab_size) representing an FSM under construction.

- from_state: int

Origin state to make a state transition.

- to_state: int

Destination state to make a state transition.

- word: str

The word which serves as a constraint for the transition between the two given states.

- reset_state: int, optional (default = None)

State to reset to otherwise. This is only valid if from_state is a sub-state.

- Returns

- torch.Tensor

FSM with the added connection.
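The “fire hydrant” example can be traced with a small sketch that uses nested lists rather than a torch.Tensor. The `connect`, `make_fsm`, and `run` helpers and the toy vocabulary are assumptions for illustration. Word-form lookup is reduced to passing a list of word indices.

```python
from typing import List, Optional

Fsm = List[List[List[int]]]  # indexed as fsm[from_state][to_state][word]


def make_fsm(num_states: int, vocab_size: int) -> Fsm:
    """Create an FSM where every state loops to itself on every word."""
    fsm = [[[0] * vocab_size for _ in range(num_states)] for _ in range(num_states)]
    for s in range(num_states):
        for w in range(vocab_size):
            fsm[s][s][w] = 1
    return fsm


def connect(fsm: Fsm, from_state: int, to_state: int, word_ids: List[int],
            reset_state: Optional[int] = None) -> Fsm:
    if reset_state is not None:
        # From a sub-state, every word goes back to the reset state by default.
        for w in range(len(fsm[from_state][from_state])):
            fsm[from_state][from_state][w] = 0
            fsm[from_state][reset_state][w] = 1
    for w in word_ids:
        fsm[from_state][from_state][w] = 0  # remove the self-loop
        if reset_state is not None:
            fsm[from_state][reset_state][w] = 0
        fsm[from_state][to_state][w] = 1    # add the transition
    return fsm


def run(fsm: Fsm, tokens: List[int], state: int = 0) -> int:
    """Follow transitions for a token sequence and return the final state."""
    for w in tokens:
        state = next(t for t in range(len(fsm)) if fsm[state][t][w] == 1)
    return state


# Hypothetical vocabulary; states 0-7 are main states, 8 is a sub-state.
vocab = {"a": 0, "fire": 1, "hydrant": 2, "truck": 3}
fsm = make_fsm(num_states=9, vocab_size=len(vocab))
connect(fsm, 0, 8, [vocab["fire"]])                    # Q0 -> Q8 on "fire"
connect(fsm, 8, 1, [vocab["hydrant"]], reset_state=0)  # Q8 -> Q1, else Q0

done = run(fsm, [vocab[t] for t in ["a", "fire", "hydrant"]])
broken = run(fsm, [vocab[t] for t in ["fire", "truck", "hydrant"]])
```

The first sequence ends in Q1 because "hydrant" immediately follows "fire"; the second ends in Q0 because "truck" triggers the reset.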